Artificial Intelligence: Acceleration & Optimization

Artificial Intelligence and Machine Learning (AI/ML) are hot topics with deep implications for businesses, and IT executives are challenged to stay ahead of growing data volumes and the storage and access needs that come with them.

Workloads that traditionally moved from on-premises infrastructure to the cloud now increasingly run on hybrid cloud platforms, and cloud costs are skyrocketing because too little time is spent planning how to migrate data so that storage, network, and compute are optimized. Equally important are the sophistication of these hardware/software combinations and the degree of expertise required to design, implement, and deploy them within a system that is already live.

The best way to incorporate all the requirements of AI and to formulate a data optimization strategy is to find a technology partner:

- with whom there is a trustworthy relationship

- that has ongoing access to subject matter experts

- with experience in organizations of comparable size

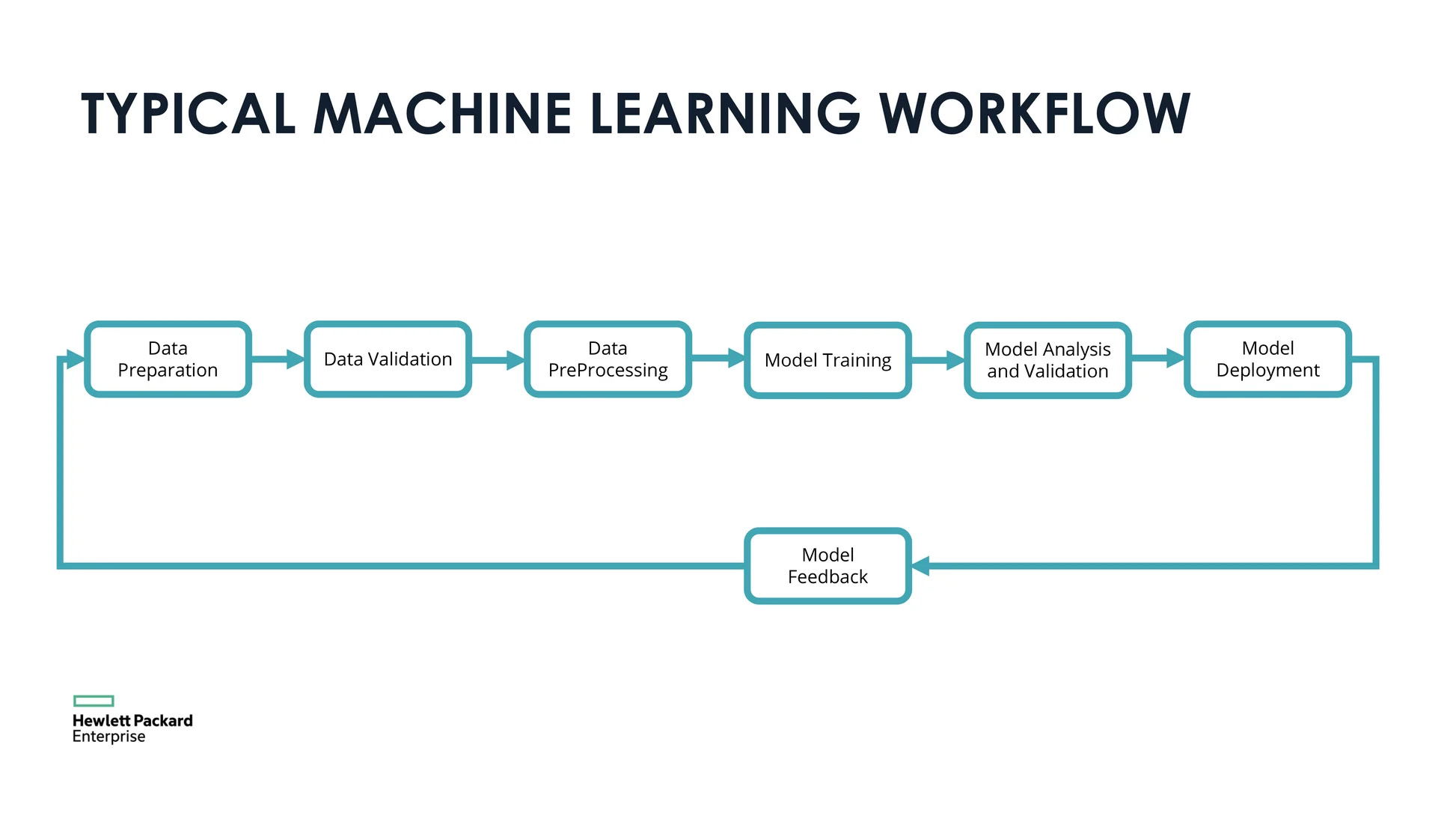

The partner should stress that starting with a data deployment model, though it might seem the obvious first step, is less effective than first assessing where the data lives and how it is used. The best-deployed models are built where most of that data lives. The partner should also recognize that the best AI learning comes from incorporating more relevant data, not from adopting new and different models.

When analyzing data, start with performance and proximity, according to Brittany Marley, Senior Client Executive at Equinix. Does the data belong to the social media sector or the banking sector? Does it need to be accessed from multiple locations around the world? Where is it located within the business ecosystem? How latency-sensitive is it? The answers to these questions help determine the bandwidth, flexibility, and agility a company needs. It is also important to address security requirements and to prepare for expected sustainability reporting; power infrastructure must be designed to optimize energy spend as data needs change over time.

Miro Hodak, Senior Member of Technical Staff for AI/ML Solutions Architecture at AMD, emphasizes that the processing power behind AI comes from a combination of algorithms, big data, and compute. From a hardware perspective, it is important to know when to use GPUs and when to use CPUs: GPUs suit AI training, particularly time-sensitive training in straightforward applications, whereas about 80% of inference happens on CPUs. Assessing GPU versus CPU use, together with appropriate 2-way APIs (Application Programming Interfaces), can deliver compelling performance and a lower TCO (Total Cost of Ownership).

For organizations where CPU inferencing is an area of differentiation, as with neural network workloads, quantization enables faster iterative learning, better optimization, and reduced power consumption. As Jay Marshall, Vice President of Business Development at Neural Magic, shares, quantization reduces numerical precision without significantly affecting accuracy, which allows models to run on smaller CPUs. For example, a ResNet-50 model can be quantized from 32 bits down to 2 bits with only a 3% drop in accuracy.
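To make "reduction of precision" concrete, here is a minimal toy sketch of symmetric uniform quantization, one common textbook scheme. It is an illustrative assumption, not Neural Magic's method, and it does not reproduce the ResNet-50 result above. Each float weight is mapped to a small signed integer using one shared scale factor, then mapped back; the reconstruction error grows as the bit width shrinks. The function names and the weight values are hypothetical.

```python
def quantize_symmetric(weights, bits):
    """Map float weights to signed integers in [-qmax, qmax] using one shared scale."""
    if bits < 2:
        raise ValueError("symmetric quantization needs at least 2 bits")
    qmax = 2 ** (bits - 1) - 1  # e.g. 127 for 8 bits, 1 for 2 bits
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / qmax if max_abs > 0 else 1.0
    # Round to the nearest integer level and clamp to the representable range.
    q = [max(-qmax, min(qmax, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q, scale):
    """Recover approximate float weights from the integer codes."""
    return [v * scale for v in q]


def max_abs_error(weights, bits):
    """Largest absolute difference between original and reconstructed weights."""
    q, scale = quantize_symmetric(weights, bits)
    approx = dequantize(q, scale)
    return max(abs(a - b) for a, b in zip(weights, approx))


if __name__ == "__main__":
    # Hypothetical toy weights; a real network layer holds millions of values.
    weights = [0.82, -0.41, 0.05, -0.93, 0.37, 0.6, -0.12, 0.0]
    for bits in (8, 4, 2):
        q, _ = quantize_symmetric(weights, bits)
        print(f"{bits}-bit codes: {q}  max error: {max_abs_error(weights, bits):.4f}")
```

At 2 bits this scheme leaves only the levels -1, 0, and 1, so each weight costs far less memory and arithmetic; whether accuracy survives depends on the model and on the more sophisticated techniques production tools apply on top of a basic scheme like this.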

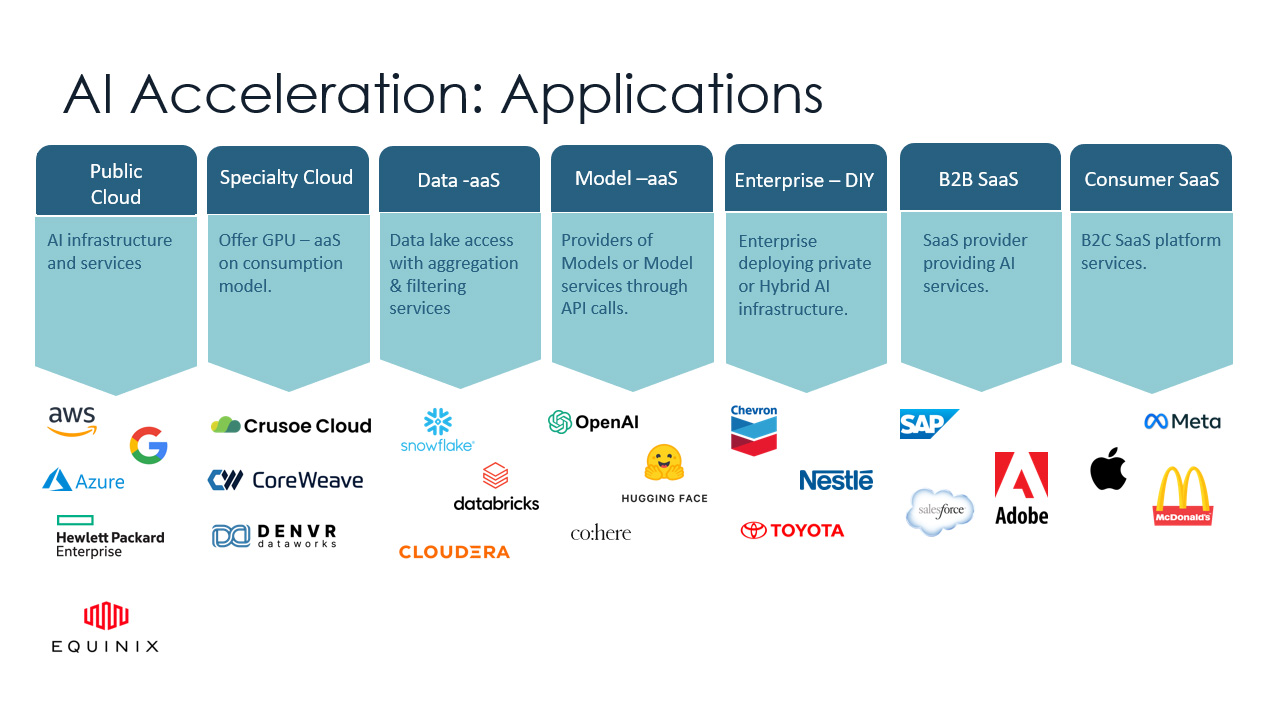

AI is prevalent, and there are many opportunities to use it. As Jonathan Harris, Transformation Leader at HPE, reminds us, most companies start with a single, small use case, and then everyone wants to try something a little different. The result is an unexpected increase in data access and data accumulation. Because cloud usage is becoming expensive, company-wide scaling becomes difficult as internal projects grow in volume, scope, and scale. What should companies do? They generally have two options: use fully integrated suites or "do it yourself" (DIY). Comprehensive suites generally make more sense, especially if they allow open-source and partner integrations. Plus, providers are now using predictive analytics to offer flexible consumption solutions, which are an effective way to manage infrastructure, costs, and efficiency.

Steve Barnett, Vice President of HPE Enterprise Sales at GDT, closed by noting that the AI ecosystem is developing and growing quickly, and there is currently no standardization. It is critical to have the best partner experience and the latest technology expertise during the selection of platforms, hardware, and software, and throughout the fully managed AI implementation process: from data to development to deployment. Those expectations must be met today and maintained consistently over the long term. After all, these investments are made in relationships, not only in projects.